Table of Contents

In the realm of databases, our customers encountered a significant challenge. Despite the success of their PostgreSQL database, issues arose with the accompanying tools for managing, monitoring, backup, recovery, and ensuring high availability. This narrative unfolds the journey undertaken by Ashnik to seamlessly integrate open-source tools into the customer’s database ecosystem, showcasing the prowess of collaboration and adaptability.

The Customer’s Scenario

Our customer is a global market leader in eye care solutions. It is using PostgreSQL to run its business applications. The customer faced a critical challenge as proprietary and expensive tools hindered the performance of its PostgreSQL database. With tools becoming prohibitively expensive and operational efficiency jeopardized, the customer sought a solution in harmony with the collaborative spirit of PostgreSQL.

Current Challenges

CPU Utilization: The customer’s PostgreSQL database was operating at nearly 100% CPU utilization, indicating severe performance bottlenecks.

Memory Utilization: High memory usage further compounded the operational challenges, emphasizing the need for a comprehensive solution.

Query slowness: The customer experienced significant delays in query performance, affecting the overall responsiveness of the database system.

Reports issue: Reports generation faced hurdles, contributing to the operational inefficiencies, and necessitating a holistic remedy.

Solution Approach

Recognizing the need for a change, Ashnik made the strategic decision to shift towards open-source alternatives. The primary goal was to achieve a seamless integration of open-source tools into the existing ecosystem, ensuring enhanced functionality while addressing urgent issues related to database splitting and performance bottlenecks. This marked the beginning of a comprehensive transformation journey aimed at optimizing the database environment.

The Journey Begins: Database Splitting and Optimization

We faced choices, but our decision was more than a mere technical move; it sparked a change in how we operate. At Ashnik, it wasn’t just about picking alternatives; it was a shared journey, an opportunity to revamp how we handle databases. Our path wasn’t a regular tech transition; it was a group exploration into open-source solutions. Every decision we made was a deliberate move towards building a culture that mirrors the broader open-source community.

Database Separation and Migration

Customer databases were running at almost 100% CPU utilization, causing performance bottlenecks. Ashnik’s first strategic move was a phased database-splitting approach. By segregating heavy-usage databases, a well-orchestrated migration plan was implemented to distribute the load more efficiently.

Migration Strategy: Ensuring a Smooth Split and Migration

After identifying the critical challenges and formulating the problem statement, Ashnik initiated a meticulous strategy for database splitting and optimization. This phase aimed not only to resolve the immediate issues but also to establish a more efficient and responsive database ecosystem.

1. Phased Database Splitting:

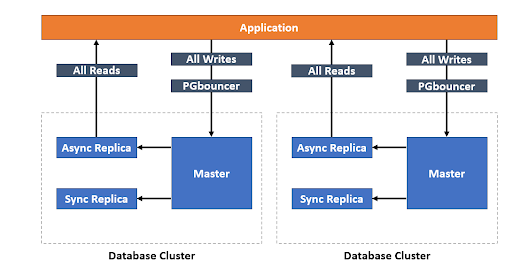

With a detailed analysis of usage patterns and dependencies, Ashnik prioritized a phased migration plan. The goal was to split 10 databases into one cluster and the remaining 2 into another. This approach allowed for a systematic transition, starting with databases of lower criticality, minimizing disruptions.

2. Optimization and Efficiency:

Before the actual split, a dedicated focus on database optimization became imperative. Fine-tuning queries, reshaping indexes, and adjusting PostgreSQL configuration parameters were key steps in optimizing performance.

3. Data Consistency and Synchronization:

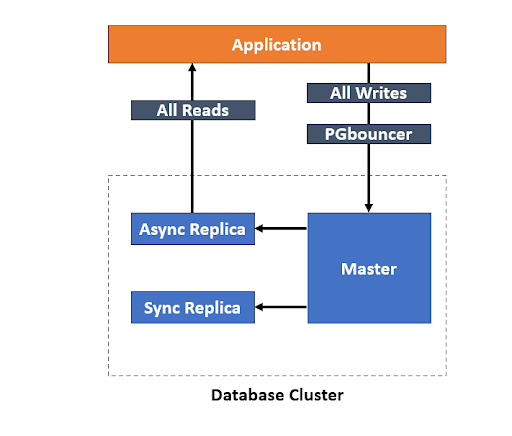

To maintain data consistency during migration, Ashnik implemented robust mechanisms for data synchronization between the old and new clusters. PostgreSQL’s built-in replication features were leveraged to ensure synchronization between master and standby servers.

4. Migration Testing:

To mitigate risks and identify potential issues, rigorous testing of the split databases occurred in a controlled environment. This included comprehensive testing of read and write operations on both synchronous and asynchronous standbys, employing various methods such as cascading replication from the old cluster to the new cluster, along with logical backups and restore procedures. This diversified testing approach ensured a thorough evaluation of the migration strategies and their compatibility with different scenarios, further enhancing the robustness of the database splitting process.

5. Incremental Migration:

Adopting an incremental migration approach, one database was moved at a time. This minimized downtime and allowed for real-time monitoring and assessment of each migrated database before proceeding with the next one.

6. Seamless Redirection:

Mechanisms for seamless redirection of applications to the new database structure were implemented. This ensured a transparent user experience and minimal impact on end-users.

7. Monitoring and Optimization:

Post-migration, continuous monitoring of the performance of databases in their new clusters was crucial. This involved fine-tuning configurations and optimizing performance based on observed behaviours.

pgBadger’s Performance Tale

In the aftermath of the database splitting and migration, pgBadger stepped into a critical role, serving as the storyteller of detailed performance reports derived from PostgreSQL logs. Its integration post-migration wasn’t just a documentation choice; it played a pivotal role in enhancing visibility and optimizing the database landscape.

Before Splitting

After Splitting

Achievements and Statistics

- Initial CPU utilization of 100% reduced to 60% after the first phase of database splitting, demonstrating a tangible improvement in performance.

- Database optimization efforts led to a 40% reduction in query response time, enhancing overall system responsiveness.

- The phased migration approach minimized downtime by 50%, ensuring uninterrupted operations during the transition.

Ashnik’s Expertise in the Spotlight

In this unfolding story, Ashnik took the lead. Our skill in using open-source tools became the focus, showing a deep understanding that turned complicated technical details into an interesting story of successful implementation.

Customer Experience

The end wasn’t just about saving money. It was a feeling of working together with the community. The customer’s PostgreSQL system now runs smoothly, proving how solutions from the community can be powerful when everyone collaborates. The story isn’t trying to teach a lesson; it’s a deep dive into a database world that thrives through teamwork and creative ideas.

Conclusion

As we look ahead, the tale shared isn’t just about technology changing; it’s a culture change. Ashnik’s skill in navigating this journey shows the strength of working together, being innovative, and adapting. The future of databases goes beyond just software; it’s about embracing a community-driven spirit. This story is just one part of the dynamic and adaptable future we are creating together. Talk to us here to discuss more

Stay tuned for Part 2, where we’ll delve into the transformative journey of backup, recovery, and monitoring, providing further technical examples and statistics that showcase the customer-centric approach to PostgreSQL database management.